Insights

An Overview of Large Language Models and Fine-Tuning

The emergence of Large Language Models (LLMs) like Chat-GPT has propelled Artificial Intelligence (AI) into the spotlight, capturing the interest of many organizations. AI’s impact on people globally is undeniable, and its influence is set to grow in the years to come. With substantial investments directed towards AI research, advancements are occurring at a remarkable pace. Developments that seemed impossible just months ago are now achievable, while future capabilities are rapidly approaching.

In recent months, a focal point of research has been the fine-tuning of LLMs. Throughout my three-month internship at Squadra Machine Learning Company, I concentrated on this subject. My primary goal was to refine a generative model so it could accurately extract features and their corresponding values from product descriptions. Previously, Named Entity Recognition (NER) was used for this task, but it yielded insufficient results. Given the impressive capabilities of LLMs in various Natural Language Processing (NLP) tasks now, it should be feasible to utilize them for feature extraction. In this blog, I will detail the steps I took to fine-tune a generative model for this objective.

So, what is the purpose of fine-tuning a generative model? Fine-tuning a pre-trained generative model customizes it to excel at specific tasks. While base models are trained on a diverse array of texts and perform well in general queries and conversations, they may struggle with the more complex task of extracting features from product descriptions. Fine-tuning addresses this by training the model to perform a particular task effectively while retaining the original model’s knowledge.

Selecting a Model

The first step involved choosing a pre-trained model for fine-tuning. With thousands of open-source models available, each offering unique strengths and weaknesses, this selection process is crucial. A goal during my internship was to conduct all work on a consumer-grade GPU (16GB), which limited my options as many models are too large for this capacity. However, with certain strategies—which I will elaborate on later—I discovered that I could fine-tune models with up to 7 billion parameters. In contrast, OpenAI’s GPT-4 consists of 1.76 trillion parameters. The performance of these ‘smaller’ LLMs has significantly improved recently, and numerous top-performing models are now accessible for public use. The Hugging Face platform showcases many of these models. Hugging Face has also developed various tools to assist developers in building such applications. At the start of my internship, I identified two 7-billion parameter models, Mistral Ai’s Mistral-7b and Meta’s Llama2-7b, as the best candidates for fine-tuning.

Assembling a Dataset

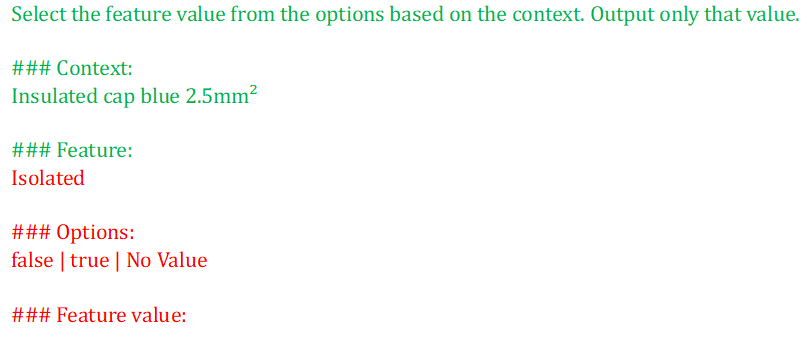

To facilitate model training, it is essential to provide examples of desired outputs, allowing for proper weight adjustments. I created a dataset formatted accordingly and divided it into training, validation, and test sets. After experimenting, I finalized my prompt format as shown:

Incorporating hashtags in the training prompt serves as a delimiter, aiding the model in learning the prompt structure more effectively. This method yielded excellent results for my application.

Fine-Tuning Process

Loading the pre-trained model is the first step in the fine-tuning process. Running a 7-billion parameter model in full precision requires approximately 28GB of GPU RAM. To prevent out-of-memory errors, model loading can be optimized through quantization, which reduces its size. Using the bitsandbytes library, one can load a model with either 8-bit or 4-bit precision, with 4-bit quantization needing around 7GB of RAM for the model, activations, and attention cache, which is manageable on a consumer GPU. Once the model is loaded, a tokenizer must also be created. Tokenizers, which can also be accessed through Hugging Face, ensure that the model receives inputs in the correct format. Initial prompts are tokenized into sub-words that are then converted to IDs. The tokenizer additionally adds padding tokens, ensuring inputs are uniform in length—crucial for training with multiple prompts of varying lengths. Following the tokenization process, fine-tuning can commence. However, fine-tuning all 7 billion parameters demands considerable computational resources and time, rendering it impractical on a consumer GPU. One way to mitigate the resource requirements is by employing the Parameter Efficient Fine-Tuning (PEFT) library, particularly through Low-Rank Adaptation (LoRA). LoRA freezes the network’s original weights and only retrains a small fraction of them. After configuring LoRA, the training data is fed into the model via the Trainer class provided by Hugging Face. The resulting adapter model can then be stacked onto the original model, achieving performance comparable to a fully fine-tuned model. Conducting thorough hyperparameter tuning proved challenging because verifying validation loss for multiple settings would be too time-consuming, necessitating a more experimental, hands-on approach.

Performance Outcomes

I designed a test set mirroring the training set, containing 50% of features with values and 50% without. The results from this method demonstrated that the best-performing models achieved up to 94% accuracy on an independent test set, with approximately 8% of features being incorrectly identified and around 4% of instances where the model should have indicated no value mistakenly providing one. These outcomes surpass those produced by the earlier NER method, which achieved around 80% accuracy. Interestingly, I found that achieving these results did not require extensive training data; about 100 training examples sufficed for my fine-tuning efforts. However, a significant drawback was that the resultant model’s speed was inadequate for practical use, taking roughly two seconds to predict each feature. Consequently, predicting 50 features would entail approximately two and a half minutes for processing.

Enhancing Inference Speed

The primary cause of the lengthy prediction time per feature is the necessity for the model to process the entire prompt before determining the feature value, which is lengthy for a single feature. To minimize the number of characters the model must analyze each time, I implemented hidden state caching during inference. This tool is effective when the inference prompts share a similar sequence at the start. By applying hidden state caching, a forward pass through the model can be conducted on the initial part of the prompt (shown in green), saving the hidden states; thereafter, for each feature, the model only processes the remaining text (shown in red), effectively halving the reading duration.

Following this, I also trained a model to enable predictions for multiple prompts simultaneously, which synergizes well with hidden state caching, as only the green text expands while the red text remains constant. This approach showed promise, but further testing is required to assess the actual increase in inference speed and any potential accuracy trade-offs.

Additionally, I explored utilizing smaller models. Several months into my internship, 3-billion parameter models gained popularity and demonstrated comparable performance to various 7-billion parameter models. I experimented with Microsoft’s phi-2 model and achieved results similar to those of the Mistral-7b model, while also effectively doubling inference speed compared to the larger models. My final exploration involved testing the vLLM library, said to enhance inference speed by a factor of 20 compared to my existing approach. Unfortunately, the 3-billion parameter model I was working with was not yet compatible with vLLM at my internship’s conclusion, so I could not evaluate this option. In summary, while feature extraction via fine-tuned generative models is feasible, the current speed limitations render them impractical for real-world application. My experience highlighted that while there is substantial research devoted to model development, implementation aspects require more focus.

Mik van der Drift

Intern Data Science at Squadra Machine Learning Company